
SGE Transfer Queue for Globus and GridWay Documentation

License

Copyright © 2002-2007 GridWay Team, Distributed Systems Architecture Group, Universidad Complutense de Madrid.

Licensed under the Apache License, Version 2.0 (the “License”); you may not use this file except in compliance with the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an “AS IS” BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.

Any academic report, publication, or other academic disclosure of results obtained with this Software will acknowledge this Software's use by an appropriate citation.

Key Concepts

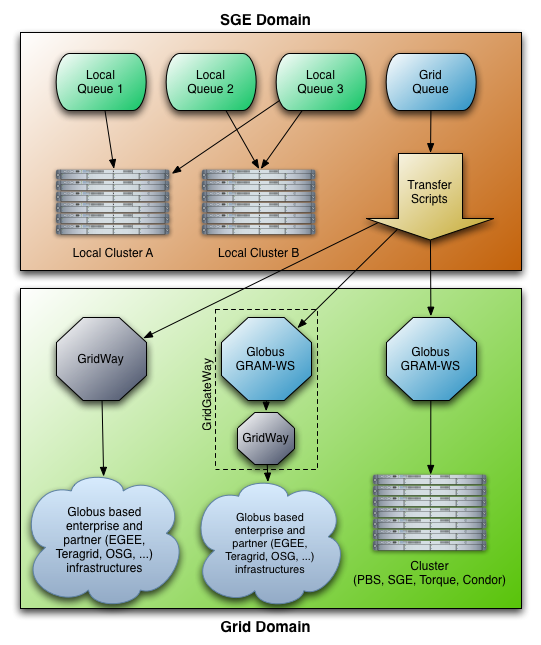

This component has two parts. One set of scripts enables SGE to submit jobs to a WS-GRAM service, such as the one provided by Globus Toolkit 4.0. Implicitly, this makes it possible to send a job to any underlying LRMS, including an encapsulated GridWay (GridGateWay). The other set offers similar functionality for GridWay. Both sets work by creating a new queue in SGE. This queue has a script attached to each possible operation: starter, suspend, resume, and terminate. In turn, these scripts interact with either Globus or GridWay to ensure that the job is executed correctly there.

The figure shows SGE with one transfer queue configured. The SGE domain contains the normal local SGE queues and a special Grid queue (which can be configured to be available only under certain conditions) that enables SGE to submit jobs to a WS-GRAM service or to GridWay. This WS-GRAM service can, in turn, virtualize a cluster (with any of several known LRMSs) or encapsulate a GridWay (thus forming a GridGateWay) that gives access to a Globus-based enterprise or partner infrastructure.

A Single Execution Explained

This section explains how a normal single execution is carried out. It is intended to give you an idea of what happens once you set up the SGE transfer queue and start submitting jobs to it.

We start at the local node and see how the job is submitted, then what happens on the remote execution host, and finally what the local node does before the execution is finished and the results are ready for the user.

Local Machine

When the qsub command is executed to send a job to the Globus transfer queue, the transfer_starter.sh script is executed. The first thing it does is modify the job script being submitted so that it works in the Globus environment. Afterwards, all the job input files and the modified script are packed into a tarball in the temporary folder provided by SGE. Once the tarball is ready, an RSL description is created and submitted to the GRAM server, using a wrapper script (also created by transfer_starter.sh) as the executable. The Globus command used for the submission blocks until the execution is completed. Meanwhile, the job is executed on the remote machine.
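The packing step can be pictured with a minimal sketch. It is not the code from transfer_starter.sh: the function name pack_job, its argument order, and the tarball name are assumptions made for illustration, and the real script may pack the files differently.

```shell
# Sketch only: pack a job script and its input files into one tarball.
# pack_job, its argument order, and the file names are hypothetical.
pack_job() {
    workdir=$1    # directory where qsub was run
    tarball=$2    # destination, e.g. "$TMPDIR/job_input.tar"
    shift 2       # remaining arguments: the job script and the input files

    # -C makes the paths stored in the archive relative to the working
    # directory, so they unpack cleanly into any directory later on.
    tar -cf "$tarball" -C "$workdir" "$@"
}
```

A call such as `pack_job "$PWD" "$TMPDIR/job_input.tar" job.sh input.dat` would produce a single file to stage to the remote host, assuming TMPDIR points at the temporary folder SGE provides for the job.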

Remote Machine

On the remote execution host, the wrapper creates a directory and unpacks the tarball into it. Then the modified job script that was inside the tarball is executed (this is where the real job runs). When the job is done, the wrapper deletes the original tarball, copies both the STDOUT and STDERR files into the job directory, and generates a new tarball from that directory. This tarball is then transferred back to the local machine.
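The remote steps can be sketched as a single shell function. This is an illustration under assumptions, not the generated wrapper itself: the name run_remote_job, the argument layout, and the stdout.txt and stderr.txt file names are hypothetical, and the sketch writes the output streams directly into the job directory instead of copying them there afterwards.

```shell
# Sketch only: the remote wrapper's steps as described in the text.
# run_remote_job and all file names are hypothetical.
run_remote_job() {
    in_tar=$1     # absolute path of the input tarball on the remote host
    jobdir=$2     # directory the wrapper creates for this job
    script=$3     # name of the modified job script inside the tarball
    out_tar=$4    # absolute path of the output tarball (outside jobdir)

    mkdir -p "$jobdir" || return 1
    tar -xf "$in_tar" -C "$jobdir" || return 1

    # Run the real job inside the job directory and keep its output
    # streams in files that will be part of the output tarball.
    ( cd "$jobdir" && sh "./$script" > stdout.txt 2> stderr.txt )
    rc=$?

    rm -f "$in_tar"    # the input tarball is no longer needed
    tar -cf "$out_tar" -C "$jobdir" . || return 1
    return "$rc"       # report the job's own exit status
}
```

Keeping the output tarball outside the job directory avoids the archive trying to include itself.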

Local Machine

Once Globus reports the job as done, the transfer_starter.sh script creates a new directory with the contents of the output tarball. It then appends the STDOUT and STDERR files to the equivalent SGE files. The scripts, the RSL or GridWay job template, and the output files are also moved from the directory where qsub was run to this new directory, so that the user's directory is left free of clutter.
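A rough sketch of this collection step follows. It assumes that the SGE_STDOUT_PATH and SGE_STDERR_PATH variables, which SGE exports to the job environment, are visible to the starter script. The function name collect_output and the stdout.txt and stderr.txt file names are hypothetical.

```shell
# Sketch only: unpack the output tarball and append the remote output
# streams to the files SGE assigned to the job.
# collect_output and the stdout.txt/stderr.txt names are hypothetical.
collect_output() {
    out_tar=$1    # tarball transferred back from the remote machine
    resdir=$2     # new directory that receives its contents

    mkdir -p "$resdir" || return 1
    tar -xf "$out_tar" -C "$resdir" || return 1

    # Append rather than overwrite, so that anything SGE has already
    # written to its own output files is preserved.
    cat "$resdir/stdout.txt" >> "$SGE_STDOUT_PATH" || return 1
    cat "$resdir/stderr.txt" >> "$SGE_STDERR_PATH" || return 1
}
```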

Installation Guide

SGE to Globus Configuration

To install this component you need an SGE execution node that shares home directories with the main cluster and has Globus installed (and GridWay, if you are using GridWay transfer queues). This node can be an existing cluster node with Globus/GridWay installed, an already installed Grid machine that runs execd and shares home directories with the SGE cluster, or a newly installed node that meets all the requirements. These are the steps needed to set up the transfer queue:

- Install Globus on the selected execution node.

- Install GridWay on the execution node (if you are configuring a GridWay transfer queue).

- Add the transfer scripts to the execution node.

- Configure a queue to use execd from the selected execution node and to use the transfer scripts.

The Globus installation is used only as a client to send jobs; it does not have to be a fully configured Globus with resources.

The execd daemon should be installed on your execution node; you only need to configure it to start at boot time. It must run on the same host where Globus is configured, because that is where the transfer scripts are executed.

Transfer Scripts

There are four transfer scripts per queue, although we only use two:

- Starter: the script that SGE calls when it wants to run a job. When this script exits, the job is done.

- Terminate: called when you issue a qdel command for a job.

- Suspend: this script stops a running job.

- Resume: wakes up a suspended job.

We do not use the Suspend and Resume scripts; making these scripts work with Globus is outside our scope.

To install the scripts, choose a directory inside the SGE installation, called GlobusScripts or GridWayScripts, to hold the files. You have to edit transfer_starter.sh and transfer_terminate.sh so that they have the correct configuration parameters. These are:

- Globus host to send the job to (if using a Globus transfer queue).

- Globus location.

- GridWay location (if using a GridWay transfer queue).
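As an illustration, the parameters above might appear near the top of each script as plain shell variables. The variable names and values below are assumptions (GLOBUS_LOCATION and GW_LOCATION follow the usual Globus and GridWay environment conventions, and GLOBUS_HOST is made up); check the scripts themselves for the names they actually use. The small check_locations helper is also hypothetical and only shows one way to catch a wrong path early.

```shell
# Hypothetical settings block; the actual variable names used in
# transfer_starter.sh and transfer_terminate.sh may differ.
GLOBUS_HOST="gram.example.org"         # placeholder: host that receives the jobs
GLOBUS_LOCATION="/usr/local/globus"    # placeholder: Globus installation path
GW_LOCATION="/usr/local/gw"            # placeholder: GridWay installation path

# Report every given directory that does not exist on this node.
# Returns 0 only if all of them exist.
check_locations() {
    rc=0
    for dir in "$@"; do
        if [ ! -d "$dir" ]; then
            echo "missing directory: $dir" >&2
            rc=1
        fi
    done
    return "$rc"
}
```

For a Globus transfer queue, `check_locations "$GLOBUS_LOCATION"` would be enough; a GridWay transfer queue would also pass `"$GW_LOCATION"`.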

Configuring the Queue

To create a new queue you have to issue the command:

$ qconf -aq

Then an editor is opened with a default queue configuration, where you have to change some parameters to suit your needs.

The following list describes the special configuration options you have to take into account to configure the queue:

- hostlist: the name of the master node; this is where the scripts are called.

- qtype: leave only the BATCH type, as the queue cannot run interactive jobs.

- slots: choose a number below the number of grid resources.

- starter_method: path to transfer_starter.sh.

- suspend_method: path to transfer_suspend.sh.

- resume_method: path to transfer_resume.sh.

- terminate_method: path to transfer_terminate.sh.
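To make the options above concrete, the following sketch prints the corresponding lines of a queue configuration. The queue name, host name, slot count, and script directory are placeholders, and the function write_queue_settings is hypothetical. Only the attributes discussed in this section are shown; a complete queue definition contains many more.

```shell
# Sketch only: print the queue attributes discussed in this section.
# The queue name, host name, and slot count are placeholders.
write_queue_settings() {
    scripts=$1    # directory holding the transfer scripts
    cat <<EOF
qname             grid.q
hostlist          master.example.org
qtype             BATCH
slots             4
starter_method    $scripts/transfer_starter.sh
suspend_method    $scripts/transfer_suspend.sh
resume_method     $scripts/transfer_resume.sh
terminate_method  $scripts/transfer_terminate.sh
EOF
}
```

Running `write_queue_settings /path/to/GlobusScripts` shows the values you would type into the editor opened by qconf -aq. As an alternative to the editor, a complete queue file can be loaded with `qconf -Aq <file>`, and `qconf -sq <queue>` displays the result.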